How can we speed up video processing? Parallel processing is the answer!

If you want to process a number of video files, it might take from minutes to hours, depending on the size of the video, frame count, and frame dimensions.

If you are processing images in batches, you can utilize the power of parallel processing and speed up the task.

In this post, we will look at how to use Python for parallel processing of videos.

We will read a video from disk, run face detection on each frame, and write the video back to disk with the detected bounding boxes drawn on it.

Let’s get started.

Install Dependencies

We will need the following packages:

OpenCV: a widely used computer vision library. In this post, we will use OpenCV to read and write video files.

To install OpenCV on your device, you can use either pip (pip install opencv-python) or apt-get (sudo apt-get install python3-opencv).

FFmpeg: a cross-platform solution to record, convert, and stream audio and video. In this post, we will use FFmpeg to join multiple video files.

To install FFmpeg, use apt-get (sudo apt-get install ffmpeg).

Import Python Libraries

Let’s import the required Python libraries.

Details of libraries used:

cv2: OpenCV library to read from and write to video files.

time: to get the current time for calculating code execution time.

subprocess: to start new processes, connect to their input/output/error pipes, and obtain their return codes.

multiprocessing: to parallelize the execution of a function across multiple input values by distributing the input data across processes.

xailient: library for face detection

Video Processing Pipeline Using Single Process

We will first define a function that processes the video using a single process. This is how we would normally read a video file, process each frame, and write the output frames back to disk.
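As a rough illustration of that read, process, write loop, here is a minimal sketch. It is not the original code from this post: `process_frames`, `process_video`, and the `detect_faces` callable are hypothetical names, and `detect_faces` is assumed to return `(x, y, w, h)` boxes for a frame (the xailient detector's actual API is not shown here). The `mp4v` codec is also an assumption.

```python
def process_frames(capture, writer, annotate, max_frames=None):
    """Read frames from `capture`, annotate each one, and write it to `writer`.

    `capture` needs a read() -> (ok, frame) method and `writer` a write(frame)
    method, which is the interface cv2.VideoCapture and cv2.VideoWriter offer.
    Returns the number of frames written.
    """
    count = 0
    while max_frames is None or count < max_frames:
        ok, frame = capture.read()
        if not ok:  # end of video (or a read error)
            break
        writer.write(annotate(frame))
        count += 1
    return count


def process_video(input_path, output_path, detect_faces):
    """Single-process pipeline; `detect_faces` is a hypothetical detector callable."""
    import cv2  # imported here so process_frames can be tried without OpenCV

    capture = cv2.VideoCapture(input_path)
    fps = capture.get(cv2.CAP_PROP_FPS)
    width = int(capture.get(cv2.CAP_PROP_FRAME_WIDTH))
    height = int(capture.get(cv2.CAP_PROP_FRAME_HEIGHT))
    fourcc = cv2.VideoWriter_fourcc(*"mp4v")  # assumed codec
    writer = cv2.VideoWriter(output_path, fourcc, fps, (width, height))

    def annotate(frame):
        # Draw one green rectangle per detected face (assumed box format).
        for (x, y, w, h) in detect_faces(frame):
            cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)
        return frame

    try:
        return process_frames(capture, writer, annotate)
    finally:
        capture.release()
        writer.release()
```

Keeping the loop separate from the OpenCV setup means the same loop can later be reused by each worker in the parallel version, with `max_frames` limiting a worker to its share of the video.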

Let’s create another function that calls the video processor, takes note of start and end times, and calculates the time taken to execute the pipeline and the frames processed per second.
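A timing wrapper along these lines could look like the sketch below. The name `time_pipeline` and its arguments are assumptions made for illustration; it uses `time.perf_counter()` for the measurement, which is one reasonable choice among several.

```python
import time


def time_pipeline(process, frame_count):
    """Run `process()` once and return (elapsed_seconds, frames_per_second).

    `process` is any zero-argument callable that runs the video pipeline;
    `frame_count` is the number of frames it handles.
    """
    start = time.perf_counter()
    process()
    elapsed = time.perf_counter() - start
    fps = frame_count / elapsed if elapsed > 0 else float("inf")
    return elapsed, fps


if __name__ == "__main__":
    # Dummy workload standing in for the real video processor.
    elapsed, fps = time_pipeline(lambda: time.sleep(0.1), frame_count=30)
    print(f"Time taken: {elapsed:.2f} s, frames per second: {fps:.1f}")
```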

Video Processing Pipeline Using Parallel Processing

Now, let’s define another function for processing video that utilizes multiprocessing.

This function takes the video processing job, which is normally done by one process, and divides it equally among the processors available on the executing device.
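The division of work can be sketched with `multiprocessing.Pool`. This is an illustrative outline rather than the original function: `process_chunk` is a hypothetical stand-in worker that only reports how many frames it was given, where a real worker would read, annotate, and write those frames to its own output file.

```python
import multiprocessing as mp


def process_chunk(frame_range):
    """Stand-in worker: a real one would process frames start..end-1."""
    start, end = frame_range
    return end - start  # number of frames this worker was responsible for


def run_parallel(frame_ranges, num_processes=None):
    """Run process_chunk on every frame range across a pool of worker processes."""
    # processes=None lets Pool choose a default based on the CPU count.
    with mp.Pool(processes=num_processes) as pool:
        return pool.map(process_chunk, frame_ranges)


if __name__ == "__main__":
    ranges = [(0, 250), (250, 500), (500, 750), (750, 1000)]
    print(run_parallel(ranges, num_processes=4))
```

The `if __name__ == "__main__":` guard matters on platforms that start workers by re-importing the script, since it keeps the workers from creating pools of their own.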

If there are four processors, and the total number of frames in the video to be processed is 1,000, then each processor gets 250 frames to process, and these are executed in parallel. This means that each process will create a separate output file, and so when the video processing is completed, there are four different output videos.
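The arithmetic above can be written as a small helper. `split_frames` is a hypothetical name, and handing any leftover frames to the first few workers is an assumption about how to treat frame counts that do not divide evenly.

```python
def split_frames(frame_count, num_processes):
    """Split frame_count frames into num_processes contiguous (start, end) ranges.

    `end` is exclusive. If the frames do not divide evenly, the first
    few ranges get one extra frame each.
    """
    base, extra = divmod(frame_count, num_processes)
    ranges = []
    start = 0
    for i in range(num_processes):
        size = base + (1 if i < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


if __name__ == "__main__":
    # 1,000 frames over four processes: 250 frames each.
    print(split_frames(1000, 4))
    # → [(0, 250), (250, 500), (500, 750), (750, 1000)]
```

With OpenCV, a worker can jump to the start of its range by calling `set(cv2.CAP_PROP_POS_FRAMES, start)` on its own `cv2.VideoCapture` before reading.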

To combine these output videos, we will use FFmpeg.
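One way to do this is FFmpeg's concat demuxer, driven from Python with `subprocess`. The sketch below is an assumption about how the join could be done, not the post's original code; the function names and the file names in the usage lines are hypothetical.

```python
import subprocess


def write_concat_list(part_files, list_path):
    """Write the list file the concat demuxer expects: one "file '<name>'" line per part."""
    with open(list_path, "w") as f:
        for name in part_files:
            f.write(f"file '{name}'\n")


def build_concat_command(list_path, output_path):
    """Build the ffmpeg command that joins the listed parts without re-encoding."""
    return [
        "ffmpeg", "-y", "-loglevel", "error",
        "-f", "concat", "-safe", "0",
        "-i", list_path,
        "-c", "copy",  # copy the streams as-is instead of re-encoding
        output_path,
    ]


def combine_parts(part_files, list_path, output_path):
    """Write the list file, then run ffmpeg; raises CalledProcessError on failure."""
    write_concat_list(part_files, list_path)
    subprocess.run(build_concat_command(list_path, output_path), check=True)


if __name__ == "__main__":
    parts = [f"output_{i}.mp4" for i in range(4)]  # hypothetical per-process outputs
    print(" ".join(build_concat_command("parts.txt", "output.mp4")))
```

Stream copy only works when all parts share the same codec and parameters, which holds here as long as every process writes its output with the same settings.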

Next, create a pipeline that runs the multiprocessing version of the video processor and calculates the execution time and the frames processed per second.

Single Processing Versus Parallel Processing

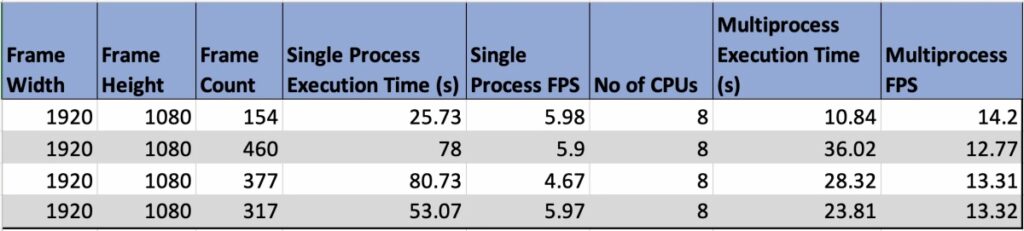

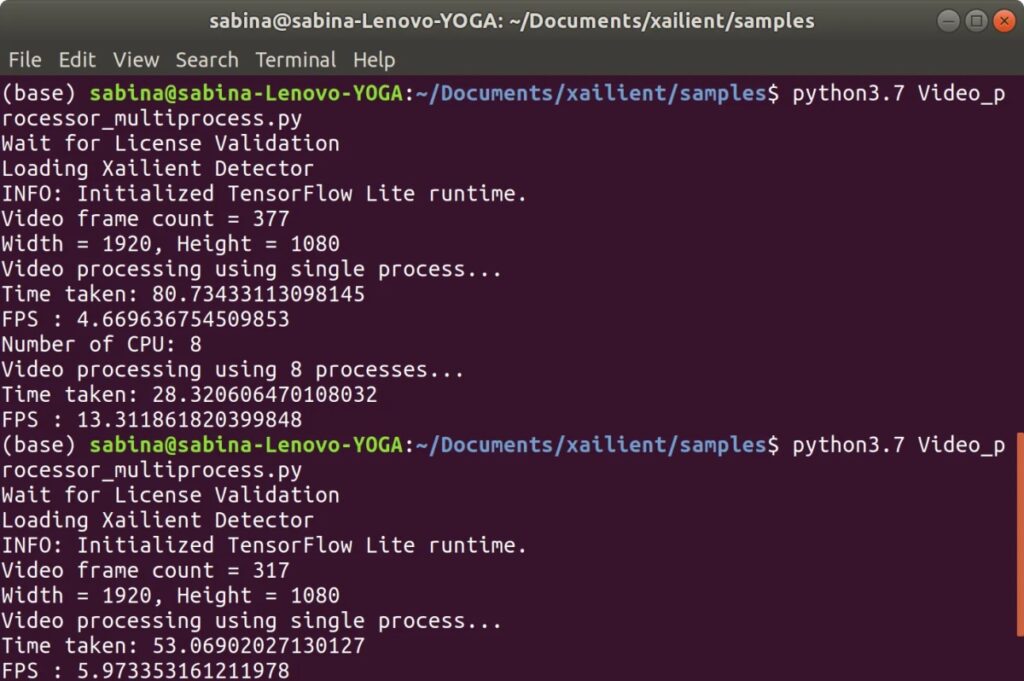

I ran this experiment on a Lenovo Yoga 920 running Ubuntu 18.04. The device has eight logical processors.

In this experiment, using all cores to process the video roughly doubled the number of frames processed per second, which makes parallel processing a considerably faster choice for your video processing needs.