Edge Computing

Xailient Detectum™ was created to meet the need for fast deep learning inference at the Edge. By combining more efficient software with an AI accelerator, Xailient reaches 70 fps on a Raspberry Pi 3B+ equipped with a Movidius™ Neural Compute Stick.

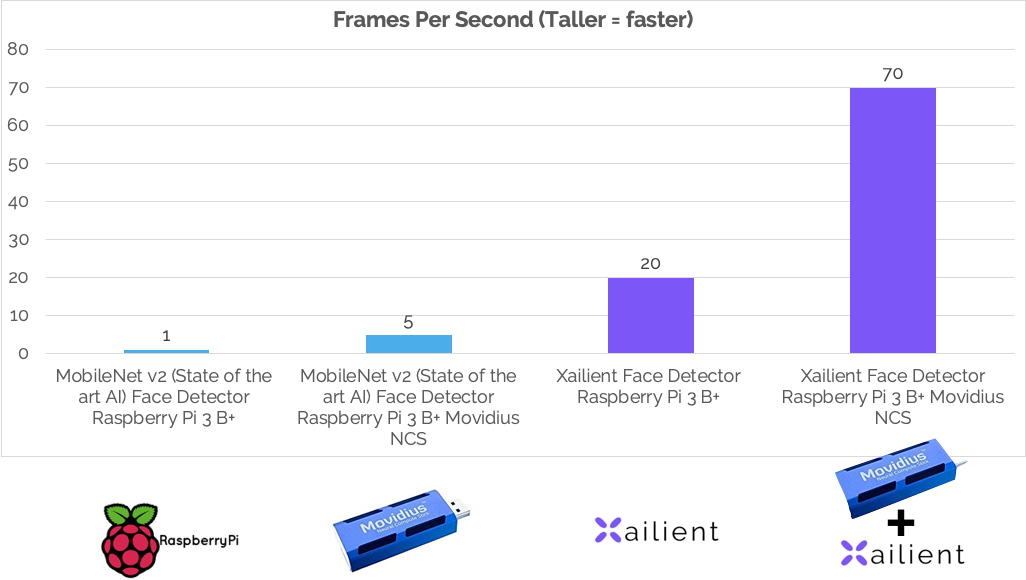

1. Xailient outperforms the state-of-the-art MobileNet v2 model both with and without a hardware accelerator.

2. Xailient Detectum was created to meet real-time demands by making deep learning algorithms more efficient. Even without an accelerator, it runs at 4 times the frame rate of the state-of-the-art MobileNet v2 accelerated with the Movidius™ Neural Compute Stick.

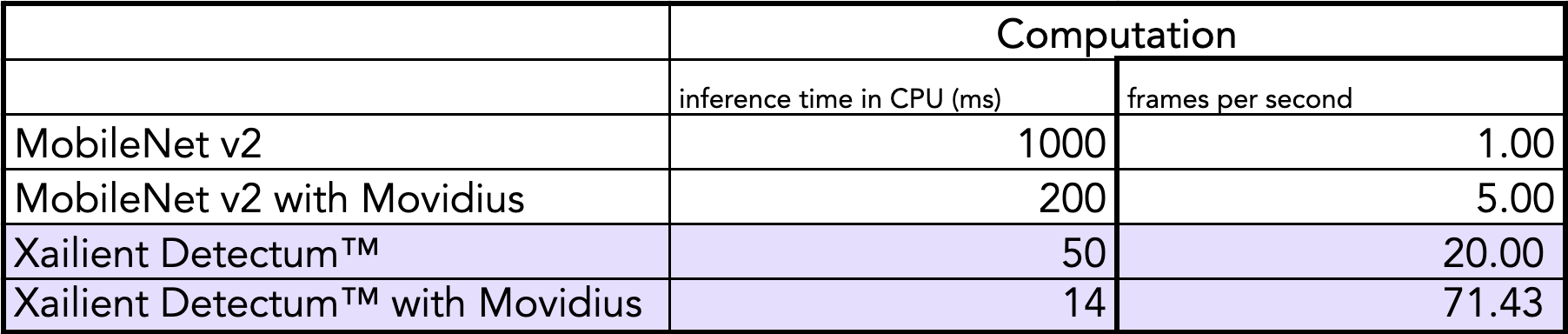

We trained MobileNet v2 and Xailient Detectum on an open-source dataset to create face detectors. These detectors both localize and classify faces in an image, i.e., they output bounding boxes.

These models served as the baseline for state-of-the-art Edge-optimized AI, and we measured their performance as inference speed in frames per second (fps) on a Raspberry Pi 3B+.
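To make the fps metric concrete, here is a minimal Python sketch of a throughput benchmark. It assumes the detector is exposed as a plain `detect(frame)` callable; `measure_fps`, the warm-up count, and `fake_detector` are hypothetical illustrations, not Xailient's actual benchmarking code.

```python
import time
from typing import Any, Callable, Sequence


def measure_fps(detect: Callable[[Any], Any],
                frames: Sequence[Any],
                warmup: int = 5) -> float:
    """Return average inference throughput in frames per second.

    `detect` is a hypothetical stand-in for a face detector's inference call.
    The first `warmup` frames are processed but not timed, so one-off costs
    (model loading, accelerator initialization, caches) do not skew the result.
    """
    if len(frames) <= warmup:
        raise ValueError("need more frames than warm-up iterations")
    for frame in frames[:warmup]:
        detect(frame)
    timed = frames[warmup:]
    start = time.perf_counter()
    for frame in timed:
        detect(frame)
    elapsed = time.perf_counter() - start
    return len(timed) / elapsed


# Usage with a hypothetical fake detector that takes about 10 ms per frame.
def fake_detector(frame: Any) -> list:
    time.sleep(0.01)  # simulate inference latency
    return []  # a real detector would return bounding boxes here


fps = measure_fps(fake_detector, list(range(30)))
print(f"{fps:.1f} fps")
```

Timing many frames after a warm-up, rather than a single frame, averages out per-frame jitter, which matters on a small board such as the Raspberry Pi 3B+.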

Four different experiments were run: MobileNet v2 Face Detector without Movidius, MobileNet v2 Face Detector with Movidius, Xailient Face Detector without Movidius, and Xailient Face Detector with Movidius.

The baseline, MobileNet v2 without the Movidius NCS, ran at 1 frame per second, and MobileNet v2 with the Movidius NCS ran at 5 frames per second. By comparison, the Xailient model processed 20 frames per second without the Movidius NCS and 70 frames per second with it.
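The reported figures can be turned into relative speedups with simple ratios. The Python sketch below uses only the four numbers above; the dictionary layout and the `speedup` helper are illustrative.

```python
# Inference speeds on a Raspberry Pi 3B+, as reported above (frames per second).
# Keys are (model, uses_movidius_ncs).
RESULTS_FPS = {
    ("MobileNet v2", False): 1,
    ("MobileNet v2", True): 5,
    ("Xailient", False): 20,
    ("Xailient", True): 70,
}


def speedup(a, b):
    """How many times faster configuration `a` is than configuration `b`."""
    return RESULTS_FPS[a] / RESULTS_FPS[b]


# Gain from adding the Movidius NCS to each model.
print(speedup(("MobileNet v2", True), ("MobileNet v2", False)))  # 5.0
print(speedup(("Xailient", True), ("Xailient", False)))          # 3.5
# Xailient without an accelerator vs. accelerated MobileNet v2.
print(speedup(("Xailient", False), ("MobileNet v2", True)))      # 4.0
# Both models accelerated with the Movidius NCS.
print(speedup(("Xailient", True), ("MobileNet v2", True)))       # 14.0
```

The ratios show that the 4× figure compares Xailient without an accelerator against MobileNet v2 with one; when both models run on the Movidius NCS, the gap is 14×.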


