Edge Computing

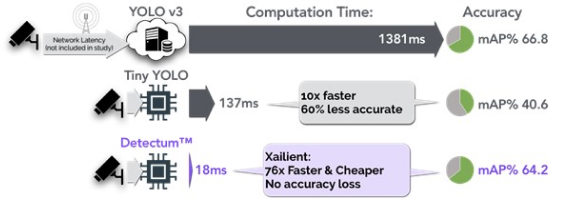

Xailient’s Detectum performs both localization and classification of objects in images and video, and has been demonstrated to outperform the industry-leading YOLOv3 in efficiency by 98.7% without losing accuracy.

YOLOv3 is a leading AI architecture for object detection in computer vision. Traditionally, YOLO, and object detection algorithms in general, run on Cloud infrastructure. Cloud costs, bandwidth costs, and network latency are driving the industry to find ways to process Computer Vision at the Edge.

1. Xailient has proven that the Detectum software performs Computer Vision 98.7% more efficiently without losing accuracy.
2. Customers can train the Detectum software on their own training data, creating a unique solution.
3. Customers can deploy it at the Edge, in the Cloud, or in a hybrid configuration. This provides cost advantages and technical flexibility never before available to Computer Vision innovators.

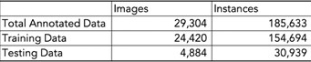

Using an open-source training dataset, Xailient trained both a traditional YOLOv3 object detection neural network and a Tiny-YOLO network using standard methods. These provided state-of-the-art baselines for Cloud and Edge-optimized AI, respectively, and measured the accuracy compromise required to shrink AI to fit at the Edge. The networks were trained to detect cars, trucks, and pedestrians (the objects of interest).

Xailient trained two YOLO neural nets, a standard Cloud-based YOLOv3 and an Edge-ready Tiny-YOLO, and compared them against a Detectum neural net. All three used the same training data and test data.

The test images were all different, with no data in common, and were not sequential frames from a related video.

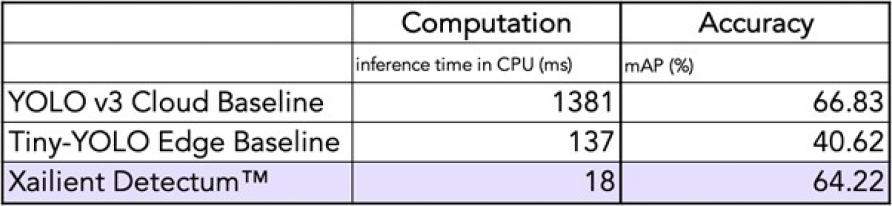

All tests used the same hardware: every neural net ran on Google Cloud Platform and was fed the pre-annotated test data as input. Each was measured for accuracy (mean average precision, mAP) and performance (inference time). The YOLOv3 Cloud Baseline achieved 66.8% mAP, and the Tiny-YOLO Edge Baseline achieved 40.6% mAP.
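For readers unfamiliar with mAP, the sketch below shows one common way to compute it: average precision (AP) is the area under each class's precision-recall curve, and mAP is the mean of the per-class APs (here, cars, trucks, and pedestrians). It is a minimal sketch under stated assumptions, not Xailient's evaluation code. It assumes detections have already been matched to ground-truth boxes (commonly at an IoU threshold of 0.5) and uses all-point interpolation, the PASCAL VOC 2010+ convention. The source does not say which mAP variant or IoU threshold was used, and the function names and sample detections are hypothetical.

```python
from typing import Dict, List, Tuple

# (confidence score, whether this detection matched a ground-truth box)
Detection = Tuple[float, bool]


def average_precision(detections: List[Detection], num_ground_truth: int) -> float:
    """All-point interpolated AP for a single class (assumed VOC-style variant)."""
    if num_ground_truth == 0:
        return 0.0  # convention choice: a class with no ground truth scores 0
    # Rank detections from most to least confident.
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    precisions: List[float] = []
    recalls: List[float] = []
    for _, is_true_positive in ranked:
        if is_true_positive:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)
    # Interpolate: precision at each rank becomes the best precision at any later rank.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Area under the interpolated curve: sum precision over each increase in recall.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class: Dict[str, Tuple[List[Detection], int]]) -> float:
    """Mean of per-class APs; per_class maps class -> (detections, ground-truth count)."""
    aps = [average_precision(dets, n) for dets, n in per_class.values()]
    return sum(aps) / len(aps)


if __name__ == "__main__":
    # Hypothetical detections for the three object classes in the test.
    example = {
        "car": ([(0.9, True), (0.8, False), (0.7, True)], 2),
        "truck": ([(0.6, True)], 1),
        "pedestrian": ([(0.5, False)], 1),
    }
    print(f"mAP = {mean_average_precision(example):.4f}")
```

Other benchmarks, such as COCO, average AP over several IoU thresholds, so mAP figures are only comparable when the same variant is used for every model, as it was here with shared test data.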

The results were unprecedented: Detectum matched the Cloud Baseline's accuracy while running 76x faster, and ran 8x faster than the Edge Baseline without its accuracy penalty.
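The article does not show how the 98.7% efficiency figure was derived, but it is consistent with the 76x speedup if "efficiency" means the fraction of inference time saved: running k times faster saves 1 − 1/k of the time. The sketch below works through that arithmetic under this assumption; the helper name `percent_time_saved` is hypothetical.

```python
def percent_time_saved(speedup: float) -> float:
    """Percent of inference time saved when a model runs `speedup` times faster.

    Assumption: efficiency gain = 1 - (new time / baseline time) = 1 - 1/speedup.
    """
    return 100.0 * (1.0 - 1.0 / speedup)


# Speedups reported in the benchmark above.
for baseline, factor in [("YOLOv3 Cloud Baseline", 76), ("Tiny-YOLO Edge Baseline", 8)]:
    print(f"{factor}x faster than {baseline}: {percent_time_saved(factor):.1f}% less inference time")
```

Under this reading, 76x faster corresponds to about 98.7% less inference time than the Cloud Baseline, and 8x faster corresponds to 87.5% less than the Edge Baseline.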

Xailient has proven a new approach to Edge AI that does not require compromises in accuracy.

