Data labeling is an essential step in a supervised machine learning task. The same is true for image annotation.

Data labeling and image annotations must work together to paint a complete picture. If you show a child a tomato and say it’s a potato, then the next time that child sees a tomato, it is very likely that they will classify it as a potato.

A machine learning model learns in a similar way, by looking at examples, and so the result of the model depends on the labels we feed in during its training phase.

‘Garbage In, Garbage Out’, is a phrase commonly used in the machine learning community, meaning the quality of the training data determines the quality of the model.

Data labeling is a task that requires a lot of manual work. If you can find a good open dataset for your project, that is labeled, then LUCK IS ON YOUR SIDE! But mostly, this is not the case. It is very likely that you will have to go through the process of data annotation by yourself.

In this post, we will look at the types of annotation, commonly used image annotation formats, and some tools that you can use for image data labeling.

Image Annotation Types

Before jumping into image annotations, it is useful to know about the different annotation types that exist so that you pick the right type for your use case.

Here are a few different types of annotations:

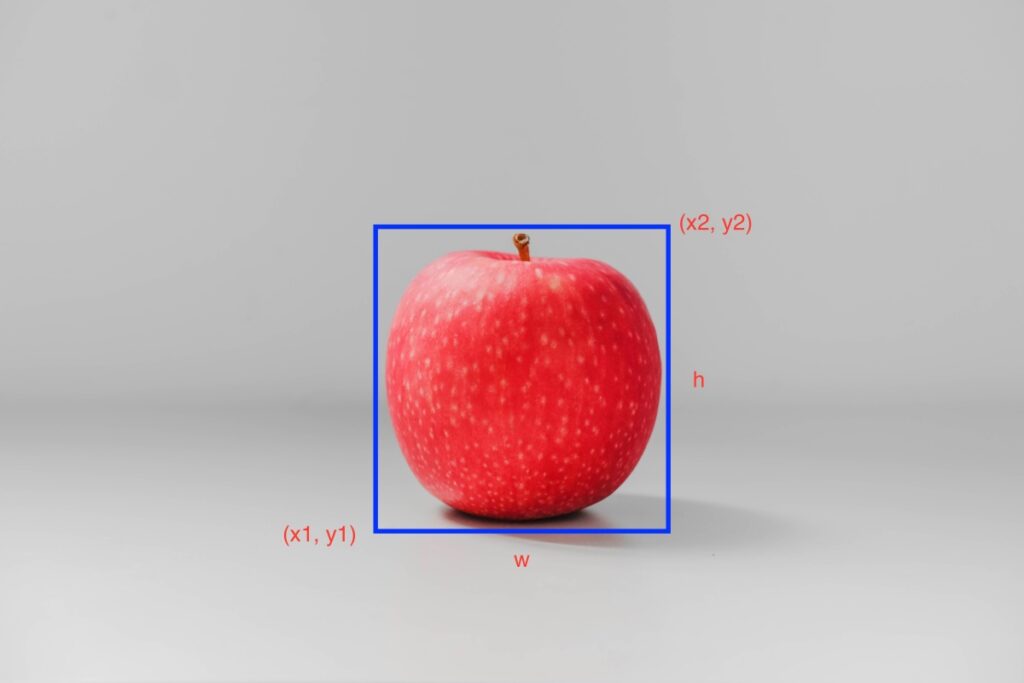

1. Bounding Boxes: Bounding boxes are the most commonly used type of annotation in computer vision. Bounding boxes are rectangular boxes used to define the location of the target object. They can be determined by the 𝑥 and 𝑦 axis coordinates in the upper-left corner and the 𝑥 and 𝑦 axis coordinates in the lower-right corner of the rectangle. Bounding boxes are generally used in object detection and localization tasks.

Bounding boxes are usually represented by either two co-ordinates (x1, y1) and (x2, y2) or by one co-ordinate (x1, y1) and width (w) and height (h) of the bounding box. (See image below)

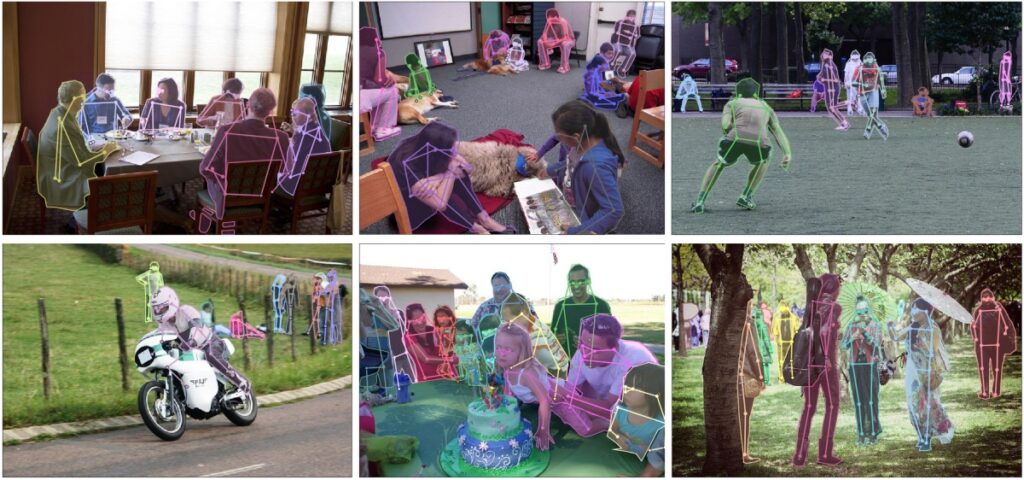

2. Polygonal Segmentation: Objects are not always rectangular in shape. With this idea, polygonal segmentation is another type of data annotation where complex polygons are used instead of rectangles to define the shape and location of the object in a much more precise way.

3. Semantic Segmentation: Semantic segmentation is a pixel-wise annotation, where every pixel in the image is assigned to a class. These classes could be pedestrian, car, bus, road, sidewalk, etc., and each pixel carries semantic meaning.

Semantic segmentation is primarily used in cases where environmental context is very important. For example, it is used in self-driving cars and robotics because it is important for the models to understand the environment they are operating in.

4. 3D Cuboids: 3D cuboids are similar to bounding boxes with additional depth information about the object. Thus, with 3D cuboids you can get a 3D representation of the object, allowing systems to distinguish features like volume and position in a 3D space.

A use-case of 3D cuboids is in self-driving cars where it can use the additional depth information to measure the distance of objects from the car.

5. Key-Point and Landmark: Key-point and landmark annotation is used to detect small objects and shape variations by creating dots across the image. This type of annotation is useful for detecting facial features, facial expressions, emotions, human body parts, and poses.

6. Lines and Splines: As the name suggests, this type is annotation is created by using lines and splines. It is commonly used in autonomous vehicles for lane detection and recognition.

Image Annotation Formats

There is no single standard format when it comes to image annotation. Below are a few commonly used annotation formats:

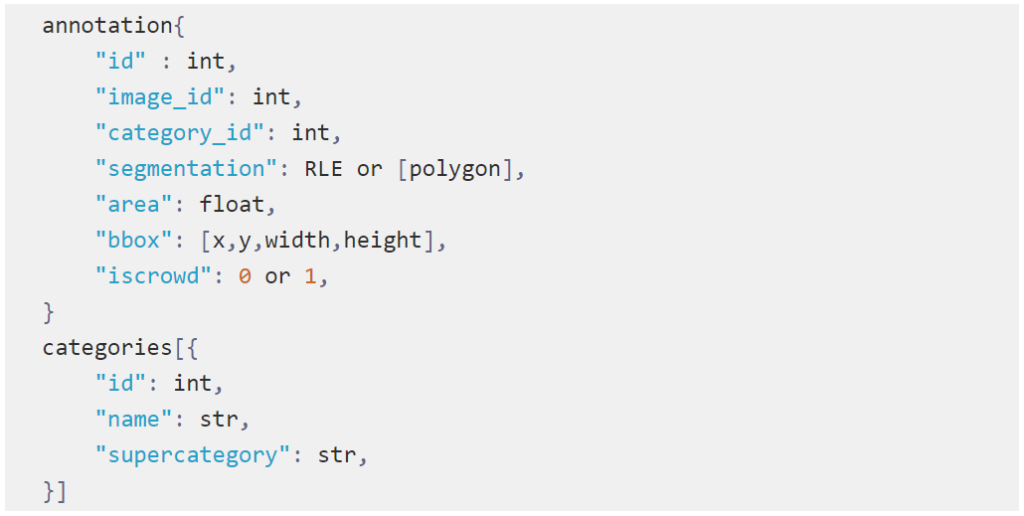

1. COCO: COCO has five annotation types: object detection, keypoint detection, stuff segmentation, panoptic segmentation, and image captioning. The annotations are stored using JSON.

For object detection, COCO follows the following format:

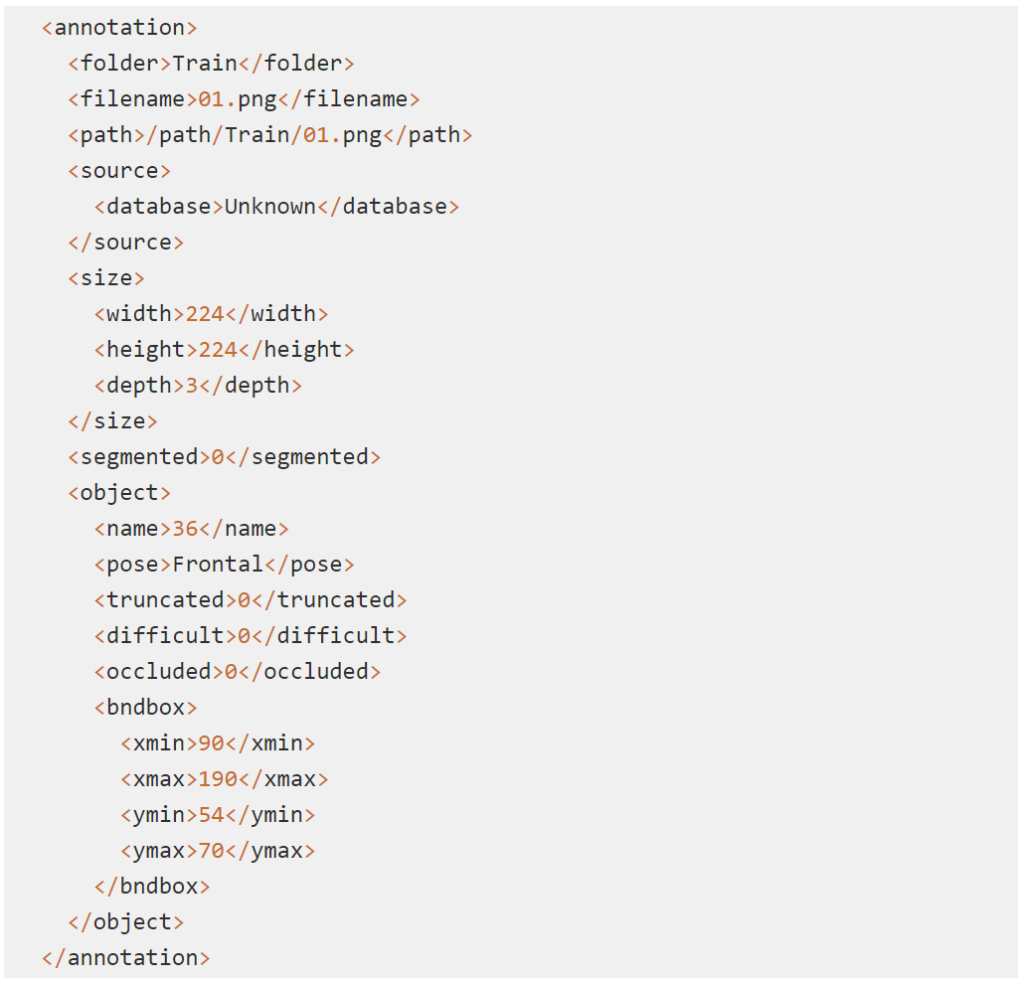

2. Pascal VOC: Pascal VOC stores annotations in an XML file. Below is an example of a Pascal VOC annotation file for object detection.

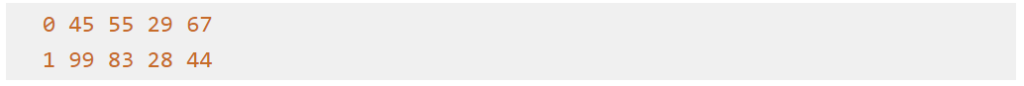

3.YOLO: In the YOLO labeling format, a .txt file with the same name is created for each image file in the same directory. Each .txt file contains the annotations for the corresponding image file, including its object class, object coordinates, height, and width.

For each object, a new line is created.

Below is an example of annotation in YOLO format where the image contains two different objects.

Image Annotation Tools

Here is a list of tools that you can use for annotating images:

- MakeSense.AI

- LabelImg

- VGG image annotator

- LabelMe

- Scalable

- RectLabel

Summary

In this post, we covered what data annotation/labeling is and why it is important for machine learning. We looked at six different types of annotations for images: bounding boxes, polygonal segmentation, semantic segmentation, 3D cuboids, key-point and landmark, and lines and splines, and three different annotation formats: COCO, Pascal VOC, and YOLO. We also listed a few image annotation tools that are available.

In the next post, we will cover how to annotate image data in detail.