“Edge computing market size is expected to reach USD 29 billion by 2025”

Over the past decade, computing has moved from on-premises systems to the cloud, which centralized systems, made them more accessible, and improved security and collaboration. Today, on the threshold of a new decade, we are witnessing a shift from cloud computing to real-time edge computing.

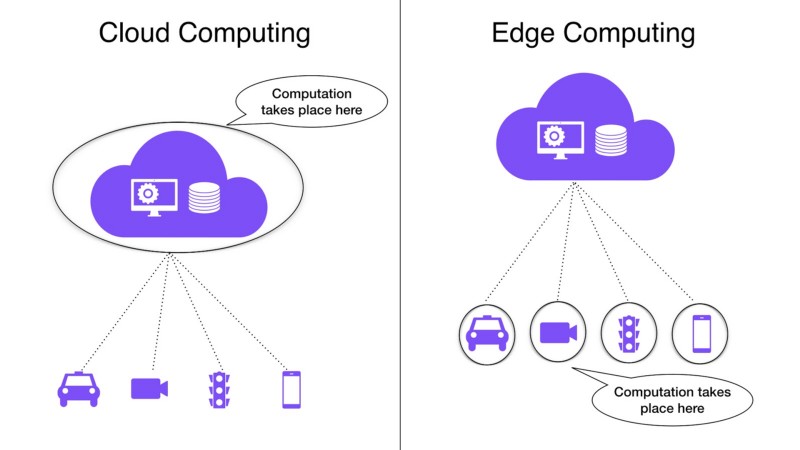

Edge computing refers to computation that takes place at the ‘outside edge’ of the internet, as opposed to cloud computing, where computation happens at a central location. Edge computing typically executes close to the data source, for example onboard or adjacent to a connected camera.

A self-driving car is a perfect example of edge computing. To drive down any road safely, a car must observe the road in real time and stop if a person walks in front of it. In such a case, the car processes the visual information and makes the decision onboard, at the edge.

This example highlights one key motivation for edge computing — speed. Centralized cloud systems provide ease of access and collaboration, but the centralization of servers means they are remote from data sources. Data transmission introduces delays caused by network latency. For a self-driving car, it is critical to have the shortest possible time from collecting data through sensors to making a decision and then acting on it.


All such real-time applications demand edge computing. A Market Research Future (MRFR) study projects that the edge computing market will reach USD 22.4 billion by 2024, and Grand View Research, Inc. expects it to reach USD 29 billion by 2025.

Top-tier VCs like Andreessen Horowitz are making big bets. Edge computing is already used in applications ranging from self-driving cars and drones to home automation systems and retail, and given how quickly its popularity is growing, we can only imagine its future applications.

Why Move from Cloud Computing to Real-Time Edge Computing?

1. Speed

In cloud computing, edge devices collect data and send it to the cloud for further processing and analysis. Devices at the edge play a limited role, sending raw information to, and receiving processed information from, the cloud. All of the real work is done in the cloud.

This type of infrastructure may be suitable for applications where users can afford to wait 2 or 3 seconds for a response. However, it is unsuitable for applications that need to respond faster, especially those that must act in real time, such as a self-driving car.

But even in a more mundane example, like basic website interactivity, developers deploy JavaScript to detect a user’s action and respond to it in the user’s browser. Where the response time is critical to the success of an application, interpreting input data close to the source is the better option if it is available.

When both input and output happen at the same location, such as in an IoT device, edge computing removes the network round trip from the response path, and real-time response becomes possible.
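To make the latency argument concrete, here is a minimal sketch of how far a car travels while it waits for a decision. All of the numbers (processing times, network round trip, vehicle speed) are illustrative assumptions rather than measurements, and the function names are made up for this example.

```python
def response_time_ms(compute_ms: float, network_round_trip_ms: float = 0.0) -> float:
    """Time from sensor reading to decision: processing plus any network round trip."""
    return compute_ms + network_round_trip_ms


def distance_travelled_m(speed_kmh: float, delay_ms: float) -> float:
    """Distance a vehicle covers while it waits for a decision."""
    metres_per_second = speed_kmh * 1000 / 3600
    return metres_per_second * delay_ms / 1000


# Illustrative assumptions, not measurements.
EDGE_COMPUTE_MS = 30.0         # slower onboard hardware, but no network hop
CLOUD_COMPUTE_MS = 10.0        # faster servers ...
NETWORK_ROUND_TRIP_MS = 100.0  # ... reached over an assumed 100 ms round trip
SPEED_KMH = 72.0               # 20 metres per second

edge_delay = response_time_ms(EDGE_COMPUTE_MS)
cloud_delay = response_time_ms(CLOUD_COMPUTE_MS, NETWORK_ROUND_TRIP_MS)

for label, delay in (("edge", edge_delay), ("cloud", cloud_delay)):
    metres = distance_travelled_m(SPEED_KMH, delay)
    print(f"{label}: {delay:.0f} ms delay, {metres:.1f} m travelled before reacting")
```

Under these assumptions the cloud path reacts later even though its servers are faster, because the network round trip dominates the total delay.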

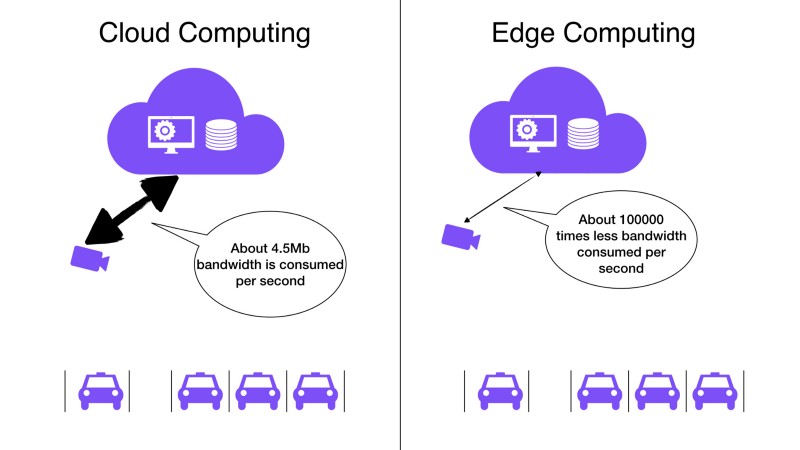

2. Bandwidth

Computing at the edge means less data transmission, because most of the heavy lifting is done by the edge devices. Instead of sending raw data to the cloud, the devices process it locally and send only the result, which requires far less bandwidth.

Take the example of a smart parking system that uses cloud computing infrastructure to find out how many parking spaces are available. A live video feed or, say, a 1080p still image every few seconds may be sent to the cloud. Imagine the network bandwidth and data transmission cost this solution requires every hour, with voluminous raw data transmitted continuously over the network.

In comparison, if the smart parking system uses edge computing, it only has to send a single integer, the number of available parking spaces, to the cloud every few seconds. This reduces bandwidth and, with it, data transmission cost.
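A rough back-of-the-envelope calculation shows the size of the gap. The sketch below assumes a compressed 1080p still of about 0.5 MB, a 4-byte integer for the count, and one transmission every 5 seconds. These figures are assumptions for illustration only, and protocol overhead is ignored (it would in practice outweigh the 4-byte payload itself).

```python
SECONDS_PER_HOUR = 3600


def bytes_per_hour(payload_bytes: int, interval_seconds: float) -> float:
    """Payload volume sent in one hour when one message goes out every interval."""
    return payload_bytes * SECONDS_PER_HOUR / interval_seconds


# Illustrative assumptions, not measurements.
FRAME_BYTES = 500_000   # one compressed 1080p still, roughly 0.5 MB
COUNT_BYTES = 4         # free-space count as a 32-bit integer
INTERVAL_SECONDS = 5    # "every few seconds"

cloud_bytes = bytes_per_hour(FRAME_BYTES, INTERVAL_SECONDS)
edge_bytes = bytes_per_hour(COUNT_BYTES, INTERVAL_SECONDS)

print(f"cloud (raw images): {cloud_bytes / 1e6:.0f} MB per hour")
print(f"edge (count only):  {edge_bytes / 1e3:.2f} kB per hour")
print(f"reduction factor:   {cloud_bytes / edge_bytes:,.0f}x")
```

With these assumed sizes, a single camera sends hundreds of megabytes per hour in the cloud design and only a few kilobytes in the edge design.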

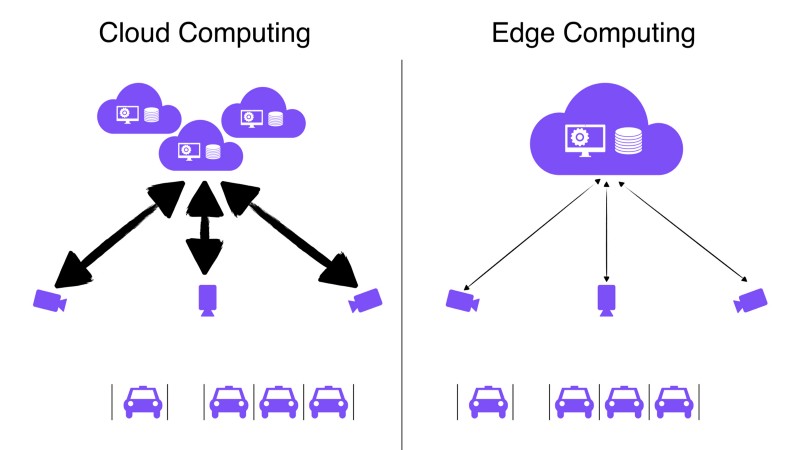

3. Scale

IoT means more systems, and more systems mean more bandwidth and more load on centralized servers. In a cloud computing architecture, every added IoT device increases the bandwidth and cloud computing power required.

Let’s return to the smart parking system above, streaming a live 1080p video feed to the cloud.

The customer wants to install this system in 10 additional parking lots. To do so, they need more network bandwidth and approximately 10 times more computing power and cloud storage space, because the load on the centralized servers grows with the incoming data from 10 additional cameras.

This increases network traffic, and the uplink bandwidth becomes a bottleneck. Scaling is therefore costly, because network traffic, bandwidth, and use of cloud resources increase with every additional device.

In contrast, in edge computing, adding one IoT device mainly adds the per-unit cost of the device itself. Bandwidth and cloud computing power do not need to increase per device, as most of the processing is done at the edge.

With an edge computing architecture, adding 10 additional IoT devices to the parking lot system is less daunting, because there is little need to increase cloud computing power or network bandwidth. Therefore, edge computing architecture is much more scalable.
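The scaling difference can be sketched as a simple capacity check: how many devices can share one uplink before it saturates? The uplink capacity and per-device bitrates below are assumptions chosen for illustration, and `max_devices` is a hypothetical helper, not part of any real library.

```python
def max_devices(uplink_capacity_kbps: int, per_device_kbps: int) -> int:
    """Number of devices a shared uplink can carry before it saturates."""
    return uplink_capacity_kbps // per_device_kbps


# Illustrative assumptions, not measurements.
UPLINK_CAPACITY_KBPS = 50_000  # a 50 Mbps shared uplink
STREAM_KBPS = 5_000            # one live 1080p stream sent to the cloud
RESULT_KBPS = 1                # small periodic results from an edge device

cloud_limit = max_devices(UPLINK_CAPACITY_KBPS, STREAM_KBPS)
edge_limit = max_devices(UPLINK_CAPACITY_KBPS, RESULT_KBPS)

cameras = 1 + 10  # the original camera plus 10 additional parking lots
print(f"cloud design: up to {cloud_limit} cameras, need {cameras}")
print(f"edge design:  up to {edge_limit} devices, need {cameras}")
print(f"cloud uplink saturated: {cameras > cloud_limit}")
print(f"edge uplink saturated:  {cameras > edge_limit}")
```

Under these assumptions the cloud design runs out of uplink capacity once the 10 extra cameras are added, while the edge design uses a negligible share of the same link.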

From on-premises computing to cloud computing and now to edge computing, software architectures have evolved as we demand better performance and more innovation from our computing systems.

As we outgrow our current cloud-based architectures, the edge computing market is growing, driven by demand for real-time applications and the cost pressures of IoT, among other factors. This is a trend that will color the software industry throughout the 2020s.

Welcome to the future.