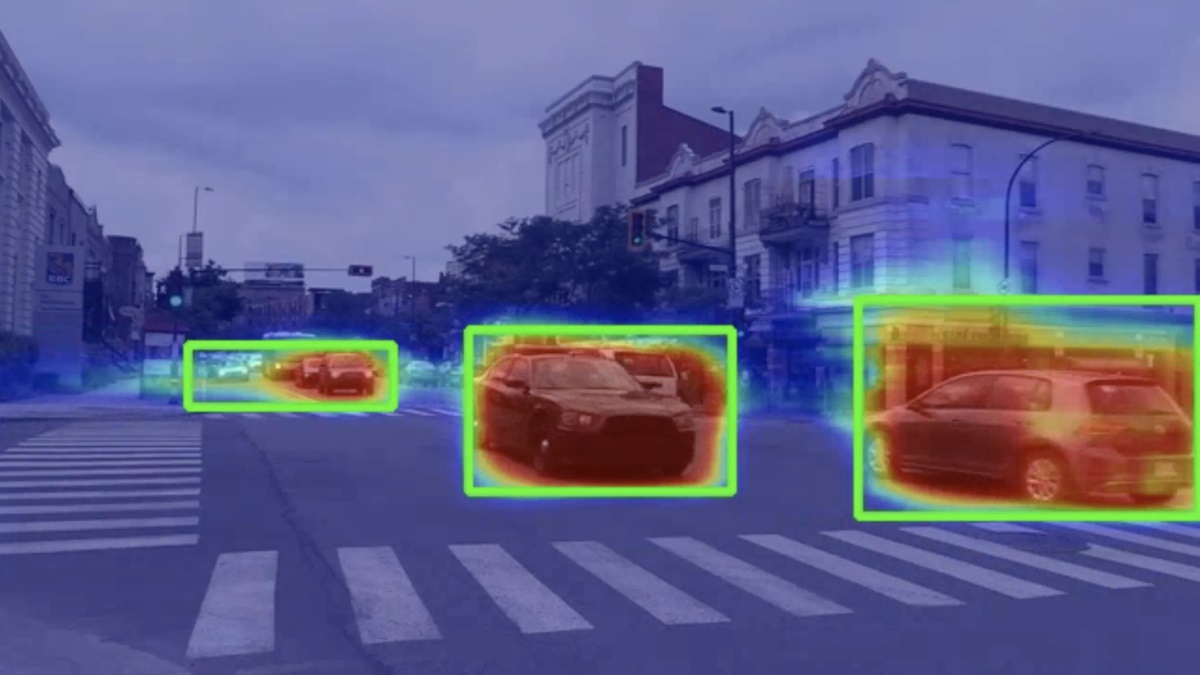

From vehicle counting and smart parking systems to autonomous driving and advanced driver assistance systems (ADAS), the demand for real-time vehicle detection (detecting cars, buses, and motorbikes) is increasing and will soon be as common an application as face detection.

And, of course, these systems need to run in real time to be usable in most real-world applications. After all, who would rely on an autonomous driving assistant that cannot detect cars while driving?

In this post, I will show you how you can implement your own real-time vehicle detection system using pre-trained models that are available for download: MobileNet SSD and Xailient Car Detector.

Before diving deep into the implementation, let’s get a bit more familiar with these models. But feel free to skip to the code and results if you wish.

MobileNet SSD

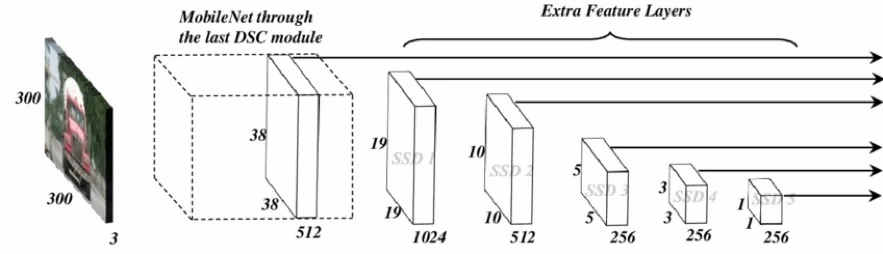

MobileNet is a lightweight deep neural network architecture designed for mobile and embedded vision applications.

In many real-world applications, such as self-driving cars, recognition tasks need to be carried out in a timely fashion on computationally limited devices. MobileNet was developed in 2017 to meet this requirement.

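The core layers of MobileNet are built from depthwise separable convolutions, which split a standard convolution into a depthwise convolution (one filter per input channel) followed by a 1x1 pointwise convolution that combines the channels. The only exception is the first layer, which is a full convolution.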
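To see why this factorization saves so much computation, here is a small Python sketch of the multiply-add counts given in the MobileNets paper for a D_K x D_K kernel, M input channels, N output channels and a D_F x D_F feature map. The function names and the example layer size are illustrative choices, not code from this post.

```python
def standard_conv_cost(dk, m, n, df):
    """Multiply-adds of a standard dk x dk convolution mapping m input channels
    to n output channels over a df x df feature map (stride 1, 'same' padding)."""
    return dk * dk * m * n * df * df


def depthwise_separable_cost(dk, m, n, df):
    """Multiply-adds of a depthwise dk x dk convolution (one filter per input
    channel) followed by a 1x1 pointwise convolution mixing m channels into n."""
    depthwise = dk * dk * m * df * df
    pointwise = m * n * df * df
    return depthwise + pointwise


if __name__ == "__main__":
    # Example layer: 3x3 kernel, 512 -> 512 channels on a 14x14 feature map.
    std = standard_conv_cost(3, 512, 512, 14)
    sep = depthwise_separable_cost(3, 512, 512, 14)
    print(f"standard:  {std:,} mult-adds")
    print(f"separable: {sep:,} mult-adds")
    # The ratio equals 1 / (1/n + 1/dk^2); prints "reduction: 8.8x" here.
    print(f"reduction: {std / sep:.1f}x")
```

The reduction factor depends only on the kernel size and the number of output channels, which is why 3x3 depthwise separable layers end up roughly 8 to 9 times cheaper than their standard counterparts.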

To learn more about MobileNet, please refer to: MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications.

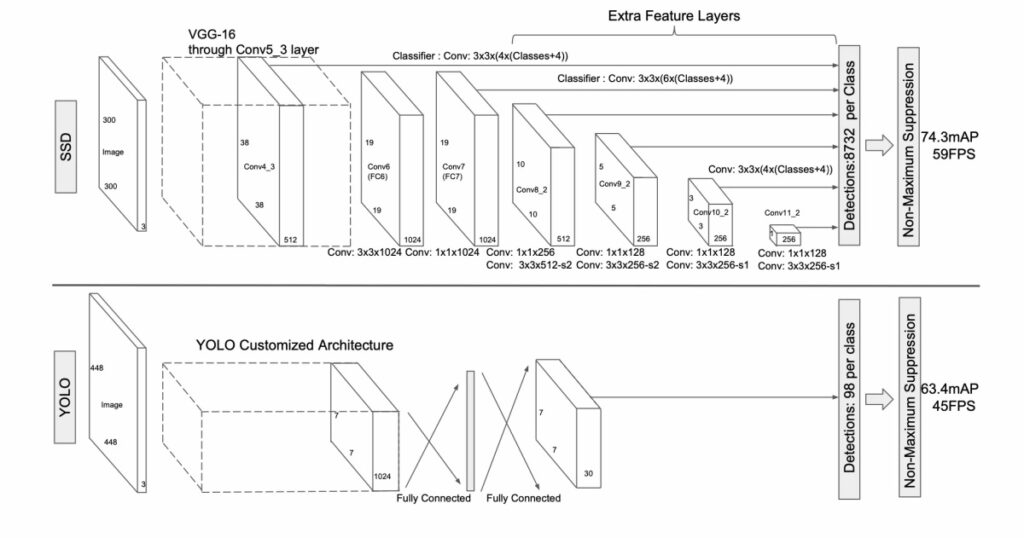

Around the same time (2016), SSD, or Single Shot Detector, was developed by Wei Liu and colleagues, including researchers at Google, to meet the need for models that can run in real time on embedded devices without a significant trade-off in accuracy.

As its name suggests, SSD needs only a single shot, that is, one forward pass of the network, to detect multiple objects within an image. The SSD approach is based on a feed-forward convolutional network that produces a fixed-size collection of bounding boxes and scores for the presence of object class instances in those boxes.

It’s composed of two parts:

- Extract feature maps, and

- Apply convolution filters to detect objects
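The "fixed-size collection of bounding boxes" comes from default boxes tiled over each feature map; the detection filters then predict class scores and offsets for every default box. Below is a simplified Python sketch of the default-box formulas from the SSD paper (linearly spaced scales from s_min = 0.2 to s_max = 0.9, width s*sqrt(a) and height s/sqrt(a) per aspect ratio a, plus an extra square box of scale sqrt(s_k * s_k+1)). The reduced aspect-ratio set (1, 2, 1/2) and the function names are assumptions for illustration; the Caffe MobileNet SSD model may use different settings.

```python
import math


def default_box_scales(m, s_min=0.2, s_max=0.9):
    """Scale s_k of the default boxes on each of m >= 2 feature maps, k = 1..m.

    Scales are spaced linearly from s_min (finest map) to s_max (coarsest map).
    """
    return [s_min + (s_max - s_min) * (k - 1) / (m - 1) for k in range(1, m + 1)]


def default_boxes(fmap_size, scale, next_scale, aspect_ratios=(1.0, 2.0, 0.5)):
    """Default boxes (cx, cy, w, h), normalised to [0, 1], for one square feature map.

    Each cell gets one box per aspect ratio a (w = s*sqrt(a), h = s/sqrt(a))
    plus an extra square box of scale sqrt(scale * next_scale).
    """
    boxes = []
    for i in range(fmap_size):
        for j in range(fmap_size):
            # Box centres sit in the middle of each feature-map cell.
            cx, cy = (j + 0.5) / fmap_size, (i + 0.5) / fmap_size
            for a in aspect_ratios:
                boxes.append((cx, cy, scale * math.sqrt(a), scale / math.sqrt(a)))
            extra = math.sqrt(scale * next_scale)
            boxes.append((cx, cy, extra, extra))
    return boxes


if __name__ == "__main__":
    scales = default_box_scales(6)  # six feature maps, as in SSD300
    boxes = default_boxes(3, scales[3], scales[4])
    print(len(boxes))  # 3 x 3 cells x (3 ratios + 1 extra) = 36
```

Because the number of feature maps, cells and aspect ratios is fixed, the network always scores the same number of boxes per image, which is what lets SSD detect everything in one pass.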

SSD is designed to be independent of the base network, so it can run on top of different base networks, such as VGG or MobileNet.

In the original paper, Wei Liu and team used the VGG-16 network as the base to extract feature maps.

To learn more about SSD, please refer to: SSD: Single Shot MultiBox Detector.

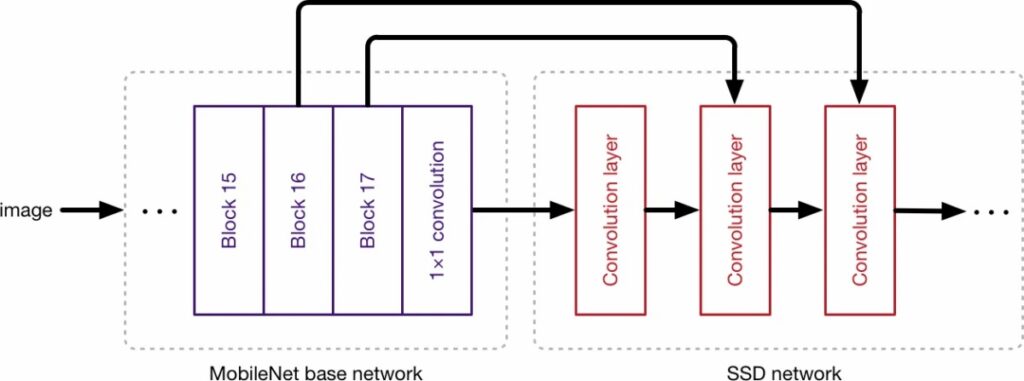

To further tackle the practical limitations of running resource-hungry, power-consuming neural networks on low-end devices in real-time applications, MobileNet was integrated into the SSD framework. When MobileNet is used as the base network in SSD, the result is called MobileNet SSD.

The MobileNet SSD model was first trained on the COCO dataset and then fine-tuned on PASCAL VOC, reaching 72.7% mean average precision (mAP).

MobileNet SSD for Real-Time Vehicle Detection

Step 1: Download the Pre-Trained MobileNet SSD Caffe Model and Prototxt

We’ll use a pre-trained MobileNet SSD model from https://github.com/chuanqi305/MobileNet-SSD/, which was trained with the Caffe-SSD framework.

Download these two files from the MobileNet SSD project:

- MobileNetSSD_deploy.caffemodel

- MobileNetSSD_deploy.prototxt

Step 2: Implement Code to Use MobileNet SSD

Because we want to use it for a real-time application, let’s also measure how many frames it processes per second (FPS).

(Parts of this code were inspired by the PyImageSearch blog.)
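A minimal sketch of such a script, assuming OpenCV with its dnn module and the two files from Step 1 in the working directory, is shown below. It loads the Caffe model with `cv2.dnn.readNetFromCaffe`, keeps only the bus, car and motorbike classes, and returns the average FPS. The preprocessing constants (300x300 input, scale 0.007843, mean 127.5) are the ones commonly used with this model; the function names, the 0.5 confidence threshold and the video path are assumptions. The FPS it reports covers decoding, inference and drawing together, which may differ from how the results in this post were measured.

```python
import time

# Labels of the Caffe MobileNet SSD model (background + 20 PASCAL VOC classes),
# in the order of the class IDs the network outputs.
CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat",
           "bottle", "bus", "car", "cat", "chair", "cow", "diningtable",
           "dog", "horse", "motorbike", "person", "pottedplant", "sheep",
           "sofa", "train", "tvmonitor"]
VEHICLES = {"bus", "car", "motorbike"}


def parse_detections(detections, width, height, min_conf=0.5):
    """Convert raw SSD output of shape (1, 1, N, 7) into vehicle boxes in pixels.

    Each row is [image_id, class_id, confidence, x1, y1, x2, y2] with corners
    normalised to [0, 1]; non-vehicle and low-confidence rows are dropped.
    """
    vehicles = []
    for _, class_id, conf, x1, y1, x2, y2 in detections[0][0]:
        label = CLASSES[int(class_id)]
        if conf < min_conf or label not in VEHICLES:
            continue
        box = (int(x1 * width), int(y1 * height), int(x2 * width), int(y2 * height))
        vehicles.append((label, float(conf), box))
    return vehicles


def run(video_path, prototxt="MobileNetSSD_deploy.prototxt",
        model="MobileNetSSD_deploy.caffemodel", show=False):
    """Detect vehicles in every frame of a video and return the average FPS."""
    import cv2  # imported here so parse_detections() works without OpenCV

    net = cv2.dnn.readNetFromCaffe(prototxt, model)
    cap = cv2.VideoCapture(video_path)
    frames, start = 0, time.perf_counter()
    while True:
        ok, frame = cap.read()
        if not ok:  # end of the video, or the file could not be read
            break
        h, w = frame.shape[:2]
        # 300x300 input; (pixel - 127.5) * 0.007843 maps values to about [-1, 1].
        blob = cv2.dnn.blobFromImage(cv2.resize(frame, (300, 300)),
                                     0.007843, (300, 300), 127.5)
        net.setInput(blob)
        for label, conf, (x1, y1, x2, y2) in parse_detections(net.forward(), w, h):
            cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 2)
            cv2.putText(frame, f"{label}: {conf:.2f}", (x1, max(y1 - 5, 15)),
                        cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 1)
        frames += 1
        if show:  # displaying frames is optional and not available on headless setups
            cv2.imshow("Vehicle detection", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    elapsed = time.perf_counter() - start
    cap.release()
    if show:
        cv2.destroyAllWindows()
    return frames / elapsed if elapsed > 0 else 0.0
```

Usage, with a hypothetical video file: `print(f"{run('traffic.mp4'):.1f} FPS")`. Keeping the output parsing in its own function makes it easy to check the filtering logic without a camera or model files.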

Experiments:

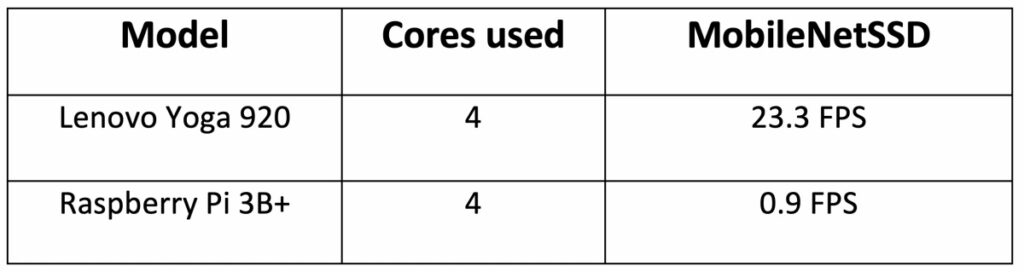

I ran the above code on two different devices:

- On my dev machine, which is a Lenovo Yoga 920 with the Ubuntu 18.04 operating system.

- On a low-cost, resource-constrained device, which is a Raspberry Pi 3B+ with the Raspbian Buster operating system.

Results:

With MobileNet SSD, I got an inference speed of 23.3 FPS on my dev machine (the Lenovo Yoga), whereas on the Raspberry Pi 3B+ the inference speed was 0.9 FPS, using all four cores.

Pretty dramatic: that is roughly a 26x slowdown, from about 43 ms to over a second per frame. This experiment shows that on a powerful device, MobileNet SSD performs well and can meet real-time requirements. But if your application is targeted for deployment on a computationally limited IoT/embedded device such as the Raspberry Pi, it does not seem to be a good fit for real-time use.

Xailient

Xailient’s vehicle detection model uses a selective attention approach to perform detection. It is inspired by the working mechanism of the human eye.

Xailient models are optimized to run on low-power devices that are memory and resource-constrained.

Now let’s see how Xailient’s pre-trained car detector performs.

Xailient Car Detector for Real-Time Car Detection

Step 1: Download the pre-trained car detector model.

We’ll use Xailient’s pre-trained car detector model downloaded from the Xailient library.

Step 2: Implement code to use the Xailient car detector model

Experiments:

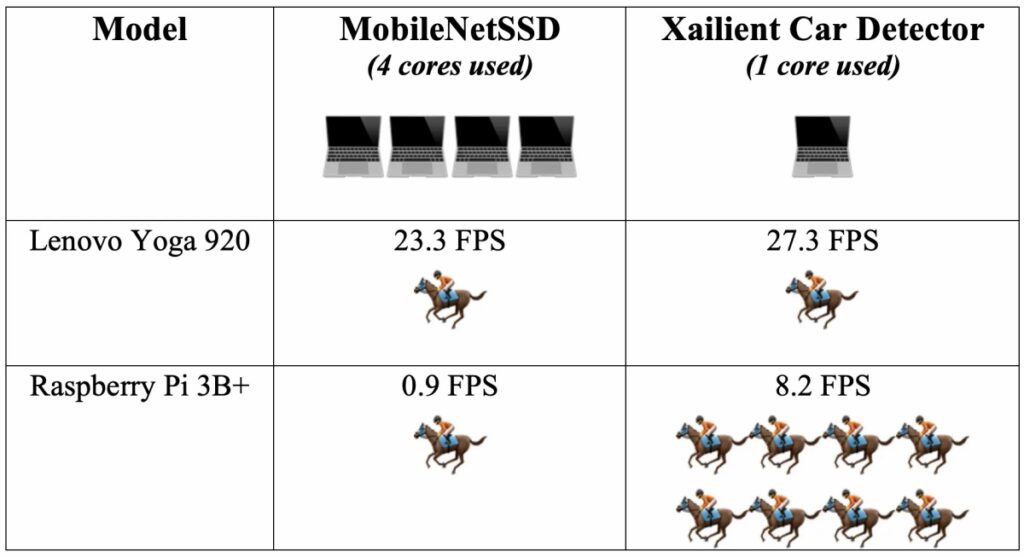

I ran the above code on the same two devices:

- On my dev machine, which is a Lenovo Yoga 920 with the Ubuntu 18.04 operating system.

- On a low-cost, resource-constrained device, which is a Raspberry Pi 3B+ with the Raspbian Buster operating system.

Results:

On the dev machine, the Xailient Car Detector gives a slight improvement in inference speed, even when only one core is used. On the Raspberry Pi, however, Xailient processes 8x more frames per second than MobileNet SSD, using only a single core.
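When comparing detectors like this, it helps to time them with the same harness so that only the model differs. Below is a minimal, detector-agnostic Python sketch; it assumes each model is wrapped in a callable that takes one frame, and the names `measure_fps` and `warmup` are illustrative, not part of either model's API.

```python
import itertools
import time
from typing import Any, Callable, Iterable


def measure_fps(detect: Callable[[Any], Any], frames: Iterable[Any], warmup: int = 1) -> float:
    """Return the number of frames per second that `detect` processes.

    The first `warmup` frames are run but not timed, because the first
    inference call often pays one-off setup costs.
    """
    it = iter(frames)
    for frame in itertools.islice(it, warmup):
        detect(frame)

    count = 0
    start = time.perf_counter()
    for frame in it:  # continues after the warm-up frames
        detect(frame)
        count += 1
    elapsed = time.perf_counter() - start
    return count / elapsed if count and elapsed > 0 else 0.0


def _fake_detect(frame):
    """Stand-in detector that takes about 10 ms per frame."""
    time.sleep(0.01)


if __name__ == "__main__":
    print(f"{measure_fps(_fake_detect, range(50)):.1f} FPS")
```

For the OpenCV-based MobileNet SSD, calling `cv2.setNumThreads(1)` before timing limits OpenCV to a single thread, which approximates the single-core setting; how to restrict the Xailient detector to one core is not covered in this post.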

Summarizing the results of both models:

The video I used for this experiment was downloaded from Pexels.com.

In this post, we looked at the need for real-time detection models and briefly introduced MobileNet, SSD, MobileNet SSD, and Xailient, all of which were developed to solve the same challenge: running detection models on low-powered, resource-constrained IoT/embedded devices with the right balance of speed and accuracy.

We used pre-trained MobileNet SSD and Xailient car detector models and performed experiments on two separate devices: a dev machine and a low-cost IoT device.

The results show a slight speed improvement of the Xailient Car Detector over MobileNet SSD on the dev machine and a significant improvement on the low-cost IoT device, even when only one core was used.

If you want to perform real-time vehicle detection, and then extend your car detection application to car tracking and speed estimation, PyImageSearch has a very good blog post called OpenCV Vehicle Detection, Tracking, and Speed Estimation.